Comprehensive LLM Pretraining Trends & Best Practices

Table Of Contents

Introduction

The Evolution of LLM Pretraining

Current State of LLM Pretraining

Emerging Trends in Pretraining

Best Practices in LLM Pretraining

Ethical Considerations & Responsible AI

Industry Applications & Case Studies

Future Directions in LLM Pretraining

Assessing & Improving Organizational AI Maturity

Conclusion

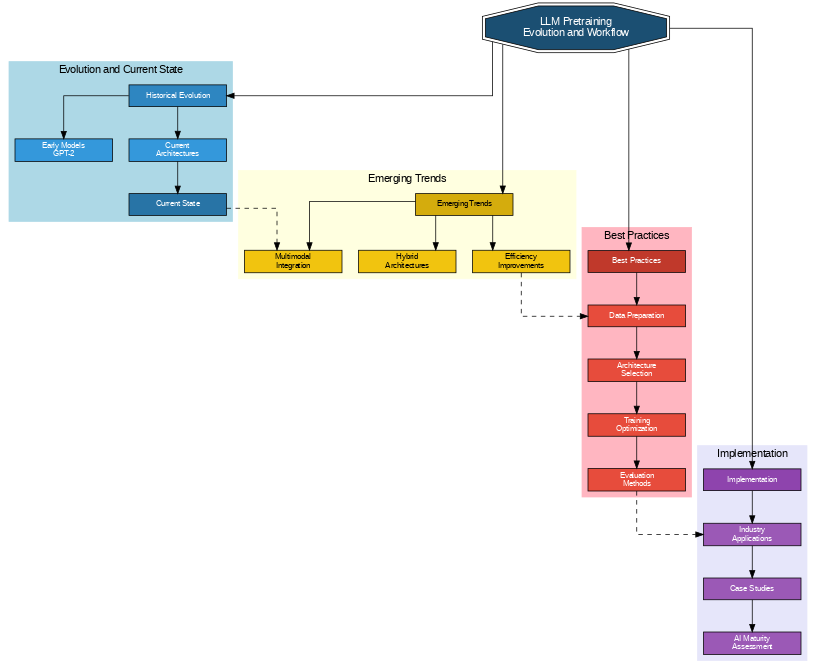

The emergence of Large Language Models (LLMs) represents one of the most significant technological breakthroughs in artificial intelligence, fundamentally transforming how we approach natural language processing (NLP) and human-computer interaction. From GPT’s debut in 2018 to today’s sophisticated models like GPT-4 and Claude, LLMs have evolved from interesting research projects into powerful tools driving innovation across industries.

At the heart of this revolution lies pretraining – a crucial process that enables these models to develop their remarkable capabilities through exposure to vast amounts of data. As organizations increasingly recognize the transformative potential of LLMs, understanding the nuances of pretraining becomes not just an academic exercise but a strategic imperative. This article explores the evolution, current state, and future directions of LLM pretraining, offering practical insights for organizations navigating the complex landscape of AI implementation.

1. Introduction

Brief history of LLMs and their impact on NLP

Importance of pretraining in LLM development

2. The Evolution of LLM Pretraining

Early models (e.g., GPT-2) to current architectures

Key milestones and breakthroughs

Changes in scale, efficiency, and ethical considerations

3. Current State of LLM Pretraining

Overview of dominant pretraining approaches

Comparison of major models and their pretraining strategies

Challenges and limitations in current pretraining methods

4. Emerging Trends in Pretraining

4.1 Multimodal Data Integration

- Combining text with images, audio, and video

- Benefits and challenges of multimodal pretraining

4.2 Hybrid Architectures

- Introduction to models like Jamba

- Advantages of hybrid approaches

4.3 Efficiency Improvements

- Techniques for reducing computational resources

- Balancing model size and performance

5. Best Practices in LLM Pretraining

5.1 Data Preparation and Curation

- Importance of high-quality, diverse datasets

- Ethical considerations in data collection

5.2 Model Architecture Selection

- Factors to consider when choosing an architecture

- Tailoring architecture to specific use cases

5.3 Training Process Optimization

- Hyperparameter tuning strategies

- Distributed training techniques

5.4 Evaluation and Benchmarking

- Comprehensive testing methodologies

- Importance of diverse evaluation metrics

6. Ethical Considerations and Responsible AI

Addressing bias in pretraining data and models

Ensuring transparency and explainability

Compliance with AI regulations and standards

7. Industry Applications and Case Studies

Examples of successful LLM implementations across sectors

Lessons learned from real-world applications

8. Future Directions in LLM Pretraining

Predicted advancements in the coming years

Potential paradigm shifts in pretraining approaches

9. Assessing and Improving Organizational AI Maturity

Framework for evaluating current AI capabilities

Strategies for gradual, tailored AI adoption and scaling

10. Conclusion

As we stand at the frontier of AI innovation, the landscape of LLM pretraining continues to evolve at an unprecedented pace. The journey from simple language models to today’s sophisticated multimodal systems underscores both the remarkable progress we’ve made and the challenges that lie ahead. The integration of diverse data types, the development of hybrid architectures, and the focus on efficient, ethical training practices are not just trends but fundamental shifts that will shape the future of AI development. Organizations must recognize that successful AI implementation requires more than just technical expertise – it demands a thoughtful approach to data curation, model selection, and ethical considerations.

Looking ahead, the key to success lies in maintaining a balance between innovation and responsibility. Organizations that can effectively navigate this balance, while staying adaptable to emerging trends and best practices, will be best positioned to harness the transformative power of LLMs. As we continue to push the boundaries of what’s possible with these models, the focus must remain on developing solutions that are not only powerful and efficient but also transparent, ethical, and aligned with human values. The future of LLM pretraining is not just about building bigger models – it’s about building better, more responsible ones that can truly serve the needs of society while advancing the field of artificial intelligence.

Organizations should therefore take a proactive stance in their AI journey: staying informed about emerging trends, investing in robust AI governance frameworks, and fostering a culture of continuous learning and adaptation. Doing so helps them remain at the forefront of AI innovation while contributing to the responsible development of this transformative technology.
